
Visual Studio slow debugging and _NO_DEBUG_HEAP


Verify your assumptions about the tools you use!

Some time ago I was tracing a perf problem (UI code + some custom logic). I needed to find out which module was eating most of the time in one specific scenario. I prepared a release version of the app and added some profiling code. I was using Visual Studio 2013. The app logged through OutputDebugString, so I had to start it under the debugger (F5) to see the logs in the Output window (I know, I know, I could have used DebugView as well…).

My main assumption was that running with F5 in release mode would cause only a small performance hit. To my astonishment, that assumption was wrong! My release-debug session pointed to a completely different place in the code…

Note: this article applies to Visual Studio up to VS2013; in VS2015 the debug heap is, fortunately, disabled by default.

Story continuation

What was wrong with the assumption? As it turned out, when I started the app with F5, Visual Studio attached a special debug heap, even in release mode! The whole application runs slower, because every system memory allocation gets additional integrity checks.

My code used the Win32 UI, so every list addition and control creation was double-checked by this special heap. When running with F5, the main bottleneck seemed to be in that UI code. When I disabled the additional heap checking (or simply ran my application without a debugger attached), the real bottleneck appeared in a completely different place.

Bugs of this kind even have a name: Heisenbugs. They disappear (or change) under the tools used to track them down. That is what happened here: the debugger changed the performance of my application, so I could not find the real hot spot…

Let’s learn from the situation! What is this debug heap? Is it really useful? Can we live without it?

Example

Let’s make a simple experiment:

    for (int iter = 0; iter < NUM_ITERS; ++iter)
    {
        for (int aCnt = 0; aCnt < NUM_ALLOC; ++aCnt)
        {
            vector<int> testVec(NUM_ELEMENTS);
            unique_ptr<int[]> pTestMem(new int[NUM_ELEMENTS]);
        }
    }

Full code located here: fenbf/dbgheap.cpp

The example above performs two allocations (and deallocations) in each of the NUM_ITERS x NUM_ALLOC inner iterations. For NUM_ITERS=100, NUM_ALLOC=100 and NUM_ELEMENTS=100000 (~400 KB per allocation) I got:

Release mode, F5: 4987 milliseconds

Release mode, running exe: 1313 milliseconds

So when running with F5, memory allocations are ~3.8 times slower!
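Numbers like these can be collected with a small timing harness. The following is only a sketch, not the original dbgheap.cpp: the names `AllocTiming` and `timeAllocations`, the use of `std::chrono::steady_clock`, and the checksum (added so that the optimizer is less likely to remove the allocations) are all assumptions made for illustration.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <memory>
#include <vector>

// Result of one timed run. The struct and function names are illustrative
// and are not taken from dbgheap.cpp.
struct AllocTiming
{
    long long milliseconds; // elapsed wall-clock time
    long long allocations;  // number of heap blocks requested
    long long checksum;     // value derived from the blocks, keeps them "used"
};

// Repeats the allocation loop from the example and measures how long it takes.
AllocTiming timeAllocations(int numIters, int numAlloc, std::size_t numElements)
{
    assert(numElements > 0); // the loop touches element 0 of each block

    AllocTiming result = {0, 0, 0};
    const auto start = std::chrono::steady_clock::now();

    for (int iter = 0; iter < numIters; ++iter)
    {
        for (int aCnt = 0; aCnt < numAlloc; ++aCnt)
        {
            std::vector<int> testVec(numElements);                 // zero-initialized
            std::unique_ptr<int[]> pTestMem(new int[numElements]); // uninitialized
            pTestMem[0] = aCnt;
            result.checksum += testVec[0] + pTestMem[0];
            result.allocations += 2;
        }
    }

    const auto stop = std::chrono::steady_clock::now();
    result.milliseconds =
        std::chrono::duration_cast<std::chrono::milliseconds>(stop - start).count();
    return result;
}
```

Calling `timeAllocations(100, 100, 100000)` from `main` once under F5 and once from the plain exe, and printing `milliseconds` each time, reproduces the comparison above. The absolute values will differ from machine to machine.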

Let’s compare call stacks:

To capture these call stacks I ran the app with F5 and paused at a random position. There were lots of allocations, so I usually landed in some interesting code. Producing the second view (without F5) was a bit harder: I set a breakpoint using _asm int 3 (DebugBreak() would also work), which let me attach the debugger and then pause at random as well. Additionally, since the second version runs much faster, I needed to increase the number of allocations happening in the program.
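The breakpoint trick can be sketched as below. This is an illustration under assumptions, not code from the original project: the `--break-for-debugger` flag and the function names are hypothetical, and the non-Windows branch exists only so that the sketch compiles elsewhere. Note that `_asm int 3` is available only in 32-bit x86 MSVC builds, which is one reason to prefer `DebugBreak()`.

```cpp
#include <cassert>
#include <csignal>
#include <cstring>
#ifdef _WIN32
#include <windows.h>
#endif

// Returns true if `flag` appears among the command-line arguments.
bool hasFlag(int argc, const char* const* argv, const char* flag)
{
    assert(flag != nullptr);
    for (int i = 1; i < argc; ++i)
    {
        if (argv[i] != nullptr && std::strcmp(argv[i], flag) == 0)
            return true;
    }
    return false;
}

// Stops the process at a breakpoint so that a debugger can take over.
void breakForDebugger()
{
#ifdef _WIN32
    DebugBreak();        // raises a breakpoint exception, like int 3
#else
    std::raise(SIGTRAP); // rough POSIX counterpart, for non-Windows builds
#endif
}

// Breaks only when the hypothetical --break-for-debugger flag was passed.
// Returns true if the breakpoint was hit and execution then continued.
bool breakIfRequested(int argc, const char* const* argv)
{
    if (!hasFlag(argc, argv, "--break-for-debugger"))
        return false;
    breakForDebugger();
    return true;
}
```

A call to `breakIfRequested(argc, argv)` at the top of `main` keeps normal runs unaffected. Starting the exe directly with the flag stops it at the breakpoint, at which point a debugger can be attached to a process that was not launched with F5.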

Running with F5, I could easily break in some deep allocation method (the stack includes a call to ntdll.dll!_RtlDebugAllocateHeap@12 ()). When I attached the debugger afterwards (the second call stack), I could only get as deep as the vector allocation method (STD).

Debug Heap

All dynamic memory allocation (new, malloc, std containers, etc.) must at some point ask the system to allocate the space. The Debug Heap adds some special rules and ‘reinforcements’ so that memory corruption can be detected.

It might be useful when coding in raw C WinAPI style (when you use raw HeapAlloc calls), but probably not when using C++ and the CRT/STD.

The CRT has its own memory validation mechanisms (read more at MSDN), so the Windows Debug Heap is doing additional, mostly redundant checks.

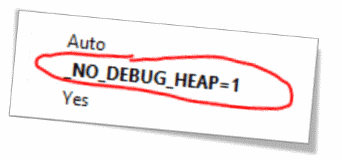

Options

What can we do about this whole feature? Fortunately, we have an option to disable it!
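The switch is the `_NO_DEBUG_HEAP` environment variable: setting it to `1` for the debugged process (in Visual Studio, for example, through the project's Debugging > Environment property) turns the Windows debug heap off. Because it is easy to forget which setting a given session used, it can help to write that into the profiling log. The sketch below does this with standard C++ only; the function names are hypothetical, and it reports only whether the variable is set, not whether the debug heap is actually active.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>
#include <string>

// Returns true when the named environment variable is set to exactly "1".
// Note: MSVC may warn (C4996) about std::getenv; _dupenv_s is its alternative.
bool envFlagIsOne(const char* name)
{
    assert(name != nullptr);
    const char* value = std::getenv(name);
    return value != nullptr && std::strcmp(value, "1") == 0;
}

// Builds a one-line note for the log describing the expected heap setup
// when the process is started from the debugger (VS2013 and earlier).
std::string describeHeapSetup()
{
    if (envFlagIsOne("_NO_DEBUG_HEAP"))
        return "_NO_DEBUG_HEAP=1: debug heap should be off for this session";
    return "_NO_DEBUG_HEAP not set to 1: expect the debug heap under F5";
}
```

Passing the result of `describeHeapSetup()` to OutputDebugString (or any other logger) at startup makes it obvious later which timings were taken with the debug heap disabled.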

Any drawbacks of this approach?

Obviously there is no additional checking… but since you’ve probably tested your app in the Debug version, and since there are additional checks in the CRT/STD, no problems should occur.

Also, in the latest Visual Studio 2015 this feature is disabled by default (it is enabled in earlier versions). This suggests that we should be quite safe.

On the other hand, when you rely solely on WinAPI calls and do some advanced system programming, the Debug Heap might help…

Summary

Things to remember:

Use “_NO_DEBUG_HEAP” to increase the performance of your debugging sessions!

As I mentioned at the beginning, I was quite surprised to see such different results when running with F5 in release mode versus running the app alone. A debugger usually adds some performance hit, but not one that huge! I can expect a slowdown in a debug build, but not that much in the release version of the application.

The Debug Heap is attached every time the app is started from the debugger: in debug builds and in release builds as well. And it’s not that obvious. At least we can disable it.

Fortunately, the Debug Heap is disabled by default in Visual Studio 2015. This suggests that the MS team might have been wrong to enable it by default in the previous versions of Visual Studio.

Resources

- ofekshilon.com: Accelerating Debug Runs, Part 1: _NO_DEBUG_HEAP - detailed information about this feature

- VC++ team blog: C++ Debugging Improvements in Visual Studio 2015

- preshing.com: The Windows Heap Is Slow When Launched from the Debugger

- informit.com: Advanced Windows Debugging: Memory Corruption Part II—Heaps

- msdn blogs: Anatomy of a Heisenbug
