Last Update:

Revisiting An Old Benchmark - Vector of objects or pointers

Table of Contents

Around one and a half year ago I did some benchmarks on updating objects allocated in a continuous memory block vs allocated individually as pointers on the heap: Vector of Objects vs Vector of Pointers. The benchmarks was solely done from scratch and they’ve used only Windows High Performance Timer for measurement. But, since recently I’m interested in more professional benchmarking libraries it would be good to revisit my old approach and measure the data again.

Intro

Just to recall we try to compare the following cases:

std::vector<Object>- memory is allocated on the heap butstd::vectorguarantees that the memory block is continuous. Thus, iterations that use those objects should be quite fast.std::vector<std::shared_ptr<Object>>- this simulates array of references from C#. You have an array, but each element is allocated in a different place in the heap.

Or visually, we compare:

VS

Each particle is 72bytes:

class Particle

{

private:

float pos[4];

float acc[4];

float vel[4];

float col[4];

float rot;

float time;

size = sizeof(float)*18 = 72

Additionally, we need to take into account address randomization. It appears that if you create one pointer after another they might end up quite close in the memory address space. To mimic real life case we can randomize such pointers so they are not laid out consecutively in memory.

My last results, on older machine (i5 2400) showed that pointers code for 80k of objects was 266% slower than the continuous case. Let’s see what we get with new machine and new approach…

New tests are made on

- Intel i7 4720HQ, 12GB Ram, 512 SSD, Windows 10.

Using Nonius library

In Nonius we can use a bit more advanced approach and use chronometer parameter that might be passed into the Benchmark method:

NONIUS_BENCHMARK("Test", [](nonius::chronometer meter) {

// setup here

meter.measure([] {

// computation...

});

});

Only the code marked as //computation (that internal lambda) will be

measured. Such benchmark code will be executed twice: once during the

estimation phase, and another time during the execution phase.

For our benchmark we have to create array of pointers or objects before the measurement happens:

NONIUS_BENCHMARK("ParticlesStack", [](nonius::chronometer meter)

{

vector<Particle> particles(NUM_PARTICLES);

for (auto &p : particles)

p.generate();

meter.measure([&particles] {

for (size_t u = 0; u < UPDATES; ++u)

{

for (auto &p : particles)

p.update(DELTA_TIME);

}

});

and the heap test:

NONIUS_BENCHMARK("ParticlesHeap", [](nonius::chronometer meter)

{

vector<shared_ptr<Particle>> particles(NUM_PARTICLES);

for (auto &p : particles)

{

p = std::make_shared<Particle>();

}

for (size_t i = 0; i < NUM_PARTICLES / 2; ++i)

{

int a = rand() % NUM_PARTICLES;

int b = rand() % NUM_PARTICLES;

if (a != b)

swap(particles[a], particles[b]);

}

for (auto &p : particles)

p->generate();

meter.measure([&particles] {

for (size_t u = 0; u < UPDATES; ++u)

{

for (auto &p : particles)

p->update(DELTA_TIME);

}

});

});

Additionally I got the test where the randomization part is skipped.

Results

Nonius performs some statistic analysis on the gathered data. When I run my tests using 10k particles, 1k updates I got the following output:

- Particles vector of objects: mean is 69ms and variance should be ok.

- Particles vector of pointers: mean is 121ms and variance is not affected by outliers.

- Particles vector of pointers but not randomized: mean is 90ms and the variance is also only a little disturbed.

The great thing about Nonius is that you don’t have to specify number of runs and iterations… all this is computed by Nonius. You just need to write a benchmark that is repeatable.

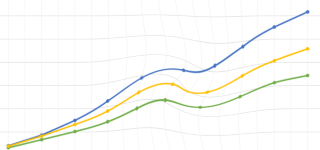

And the generated chart:

Interesting thing is when I run the same binary on the same hardware, but with just battery mode (without power adapter attached) I got slightly different data:

For all our tests the variance is severely affected, it’s clearly visible on the chart below:

Of course, running benchmarks having on battery is probably not the wises thing… but Nonius caught easily that the data is highly disturbed.

Unfortunately I found it hard to create a series of benchmarks: like when I want to test the same code but with different data set. In our particles example I just wanted to test with 1k particles, 2k…. 10k. With Nonius I have to write 10 benchmarks separately.

Using Celero library

With the Celero library we might create a bit more advanced scenarios for our benchmarks. The library has thing called ‘problem space’ where we can define different data for benchmarks. The test code will take each element of the problem space and run benchmark again. This works perfectly for particles test code: we can easily test how algorithm performs using 1k of particles, 2k… 10k without writing code separately.

First of all we need to define a fixture class:

class ParticlesFixture : public celero::TestFixture

{

public:

virtual vector<pair<int64_t, uint64_t>> getExperimentValues() const override

{

vector<pair<int64_t, uint64_t>> problemSpace;

const int totalNumberOfTests = 10;

for (int i = 0; i < totalNumberOfTests; i++)

{

problemSpace.push_back(make_pair(1000 + i * 1000, uint64_t(0)));

}

return problemSpace;

}

};

The code above returns just a vector of pairs {1k, 0}, {2k, 0}, … {10k, 0}. As you can see we can even use it for algorithms that uses two dimensional data range…

Then we can define fixture classes for the final benchmarks:

class ParticlesObjVectorFixture : public ParticlesFixture

{

public:

virtual void setUp(int64_t experimentValue) override

{

particles = vector<Particle>(experimentValue);

for (auto &p : particles)

p.generate();

}

/// After each run, clear the vector

virtual void tearDown()

{

this->particles.clear();

}

vector<Particle> particles;

};

and vector of pointers, randomized or not:

class ParticlesPtrVectorFixture : public ParticlesFixture

{

public:

virtual bool randomizeAddresses() { return true; }

virtual void setUp(int64_t experimentValue) override

{

particles = vector<shared_ptr<Particle>>(experimentValue);

for (auto &p : particles)

p = make_shared<Particle>();

if (randomizeAddresses())

{

// randomize....

}

for (auto &p : particles)

p->generate();

}

/// After each run, clear the vector

virtual void tearDown()

{

this->particles.clear();

}

vector<shared_ptr<Particle>> particles;

};

then the version without randomization:

class ParticlesPtrVectorNoRandFixture : public ParticlesPtrVectorFixture

{

public:

virtual bool randomizeAddresses() { return false; }

};

And now the tests itself:

BASELINE_F(ParticlesTest, ObjVector, ParticlesObjVectorFixture, 20, 1)

{

for (size_t u = 0; u < UPDATES; ++u)

{

for (auto &p : particles)

p.update(DELTA_TIME);

}

}

BENCHMARK_F(ParticlesTest, PtrVector, ParticlesPtrVectorFixture, 20, 1)

{

for (size_t u = 0; u < UPDATES; ++u)

{

for (auto &p : particles)

p->update(DELTA_TIME);

}

}

BENCHMARK_F(ParticlesTest, PtrVectorNoRand, ParticlesPtrVectorNoRandFixture, 20, 1)

{

for (size_t u = 0; u < UPDATES; ++u)

{

for (auto &p : particles)

p->update(DELTA_TIME);

}

}

quite simple… right? :)

Some of the code is repeated, so we could even simplify this a bit more.

Results

With this more advanced setup we can run benchmarks several times over different set of data. Each benchmark will be executed 20 times (20 measurements/samples) and only one iteration (in Nonius there was 100 samples and 1 iteration).

Here are the results:

The values for a given benchmark execution is actually the min of all samples.

We get similar results to the data we get with Nonius:

- for 10k particles:

ObjVectoris around 66ms,PtrVectoris 121ms andPtrVectorNoRandis 89ms

Celero doesn’t give you an option to directly create a graph (as

Nonius), but it can easily output csv data. Then we can take it and use

a spreadsheed to analyze it and produce charts.

Here’s the corresponding graph (this time I am using mean value of of

gathered samples).

In the generated CSV there are more data than you could see in the

simple Console table.

There are:

* Group,

* Experiment,

* Problem Space

* Samples

* Iterations

* Baseline us/Iteration

* Iterations/sec

* Min (us)

* Mean (us)

* Max (us)

* Variance

* Standard Deviation

* Skewness

* Kurtosis

* Z Score

By looking at the data you can detect if your samples got a proper distribution or if they were disturbed. When I run Celero binary in battery mode then I could spot the difference between AC mode. So we can detect the same problems of our data as we’ve noticed with Nonius.

Summary

With this post I wanted to confirm that having a good benchmarking library is probably better that your own simple solution. Libraries like Nonius are easy to use and can pick strange artefacts in the results that might be invisible using just a stopwatch approach. With Celero we get even more flexibility and benchmarks can be executed over different range of data.

See my previous post about those benchmarking libraries: Micro benchmarking libraries for C++

Source code available on githib: github/fenbf/benchmarkLibsTest

I've prepared a valuable bonus if you're interested in Modern C++!

Learn all major features of recent C++ Standards!

Check it out here: